How Does an Algorithm Align my Teeth?

This year, I finally decided to get my teeth straightened.

I had worn removable aligners as a kid, but given my lack of willpower, I did not wear them consistently enough, and my teeth never got 100% straight. This has been bothering me for the last 10+ years (#firstworldproblems).

So, in December 2020, I had my first appointment at the dentist to get all the scans done and begin an Invisalign treatment. Invisalign is a custom-made orthodontic system for your teeth, consisting of a set of clear “invisible” and removable aligners. Each week, you receive a new set that gradually helps move your teeth into the desired place. Since I did not want to get old-school braces and look like a 30-year-old Darla in Finding Nemo, Invisalign seemed like a good option.

After the second appointment in January, I walked home with my first set of clear aligners in my mouth, a designer-looking Invisalign package in my hands, and disconcertment in my spirit, due to a lack of information. How does this technology work? How is the trajectory of the teeth calculated? How accurate is it? While I love my dentist (because he is competent, NOT because he happens to be quite handsome), I did not really get any helpful background information, except for a beautifully marketed Invisalign flyer informing me that “A lot of powerful technology and doctor’s expertise combine to make a digital plan for shaping your new smile.” (https://www.invisalign.com/how-invisalign-works/treatment-plan) What a great marketing pitch. But what exactly is the “powerful technology” behind it? I decided to investigate a bit.

The Technologies

There is not one technology behind Invisalign, but several. The treatment starts with portrait pictures of your smile, which serve as the basis for a 2D facial analysis (or “smile” analysis). I will talk about this step a bit more in the next section.

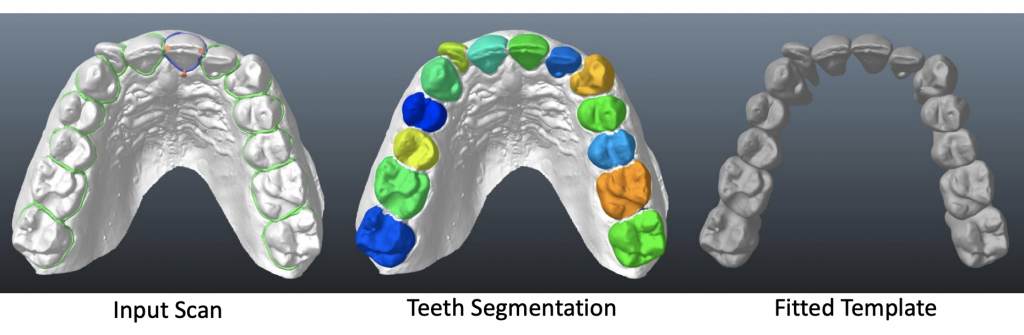

The dentist will then scan your teeth using the iTero Element® scanner. It’s a fancy name for a 3D scanner based on 3D reconstruction technology from the fields of computer vision and computer graphics. (Fun fact: Disney is behind some of the research in teeth reconstruction. Perhaps they want their employees to have teeth as straight as those of their movie characters? https://la.disneyresearch.com/publication/model-based-teeth-reconstruction/) This technology works from 2D images: for Invisalign, a hand-held scanner is literally stuck in your mouth and moved around. This method is called an intraoral digital dental impression scan.

It replaces the old-school mold they used to stick into your mouth, which made you gulp for air (though intraoral scanning has been reported to be less precise than digitizing a cast made from such a mold; see Flügge et al., 2013).

The method used by the scanner is called parallel confocal imaging, another fancy term, which means that thousands of points of laser light are used to trace, capture, and reconstruct the shape of your teeth.
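The core idea of confocal scanning can be illustrated with a minimal depth-from-focus sketch. It assumes that, for each laser point, the scanner sweeps its focal plane through a series of depths and that the reflected intensity peaks when the tooth surface is in focus; the depth of that peak becomes the surface height at that point. The function names and all numbers are hypothetical, and iTero’s actual processing is not public.

```python
def depth_from_focal_sweep(intensities, focal_depths_mm):
    """Return the focal depth (mm) at which the measured intensity peaks.

    Hypothetical helper: in a confocal setup, light from an in-focus surface
    passes the pinhole, so the brightest reading marks the surface depth.
    """
    if len(intensities) != len(focal_depths_mm):
        raise ValueError("need one intensity reading per focal depth")
    best = max(range(len(intensities)), key=lambda i: intensities[i])
    return focal_depths_mm[best]


def height_map(sweeps, focal_depths_mm):
    """Turn a grid of per-point focal sweeps into a grid of surface depths."""
    return [[depth_from_focal_sweep(point, focal_depths_mm) for point in row]
            for row in sweeps]


if __name__ == "__main__":
    depths = [0.0, 0.5, 1.0, 1.5, 2.0]  # invented focal planes, in mm
    sweeps = [
        [[0.1, 0.3, 0.9, 0.4, 0.1], [0.2, 0.8, 0.3, 0.1, 0.0]],
        [[0.0, 0.1, 0.2, 0.7, 0.3], [0.9, 0.4, 0.1, 0.0, 0.0]],
    ]
    print(height_map(sweeps, depths))  # [[1.0, 0.5], [1.5, 0.0]]
```

A real scanner does this for thousands of points in parallel and then stitches the resulting height maps from many positions into one 3D model.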

Doing all of this is not trivial: the technology needs to accurately capture the geometry of your teeth and the gingiva (your gums, where they meet the teeth), which serve as the basis for the treatment plan. It also needs to “guess” where your roots are, since they are not visible. According to a 2003 paper on Invisalign (see Beers et al., 2003), this step is (or was) done using simple statistics: a linear model, likely a regression, is fit using four tooth properties, and a cost function is used to find the best-fitting model.

I say this step “was” done this way because I imagine newer, more advanced methods are used these days, which take into account more properties of your teeth and mouth. In the picture below, for instance, you can see a method from 2016 that uses image recognition on a simple picture (no 3D scan) to trace your teeth. Clearly, things have moved on since 2003.
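The 2003 approach, a linear model fit by minimising a cost function, can be sketched as follows. The four inputs stand in for the unnamed “tooth properties” and are assumed here to be standardised crown measurements; the target is a hypothetical root measurement. The data are synthetic, generated from invented coefficients, so a correct fit should recover them. Batch gradient descent on a mean-squared-error cost is just one way to do the fit (a closed-form least-squares solve would also work); it is not necessarily what Invisalign used.

```python
from itertools import product


def predict(weights, bias, features):
    """Linear model: weighted sum of the features plus a bias term."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def mse_cost(weights, bias, xs, ys):
    """Mean squared error between predictions and observed targets."""
    return sum((predict(weights, bias, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_linear(xs, ys, lr=0.1, steps=2000):
    """Minimise the MSE cost with batch gradient descent."""
    n, d = len(xs), len(xs[0])
    weights, bias = [0.0] * d, 0.0
    for _ in range(steps):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(xs, ys):
            err = predict(weights, bias, x) - y
            for j in range(d):
                grad_w[j] += 2.0 * err * x[j] / n
            grad_b += 2.0 * err / n
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


if __name__ == "__main__":
    # Synthetic data: four standardised crown measurements (every +/-1 combination)
    # and a hypothetical root measurement generated from invented coefficients.
    true_w, true_b = [0.5, 1.2, -0.3, 0.8], 14.0
    xs = [list(p) for p in product([-1.0, 1.0], repeat=4)]
    ys = [predict(true_w, true_b, x) for x in xs]
    w, b = fit_linear(xs, ys)
    print([round(v, 3) for v in w], round(b, 3))  # [0.5, 1.2, -0.3, 0.8] 14.0
    print(mse_cost(w, b, xs, ys) < 1e-12)         # True
```

With real scans the data would be noisy, so the cost would not reach zero; the fit would instead find the coefficients that minimise the remaining error.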

So, the first important step is to create an accurate model of your teeth.

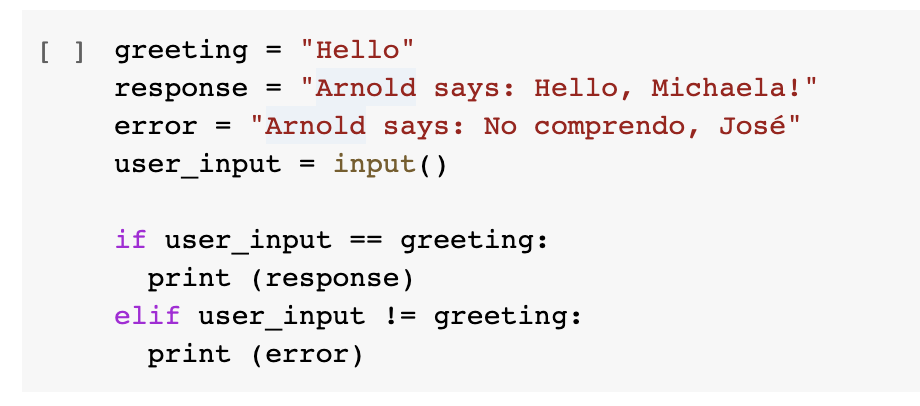

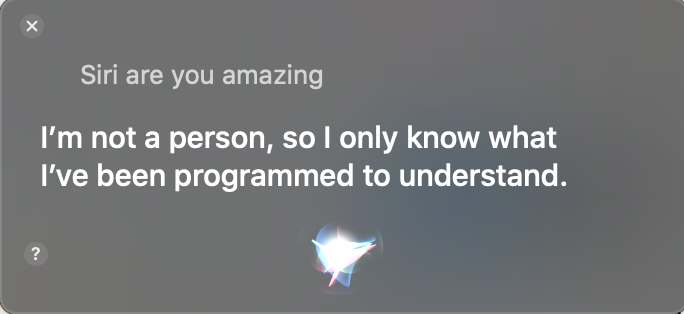

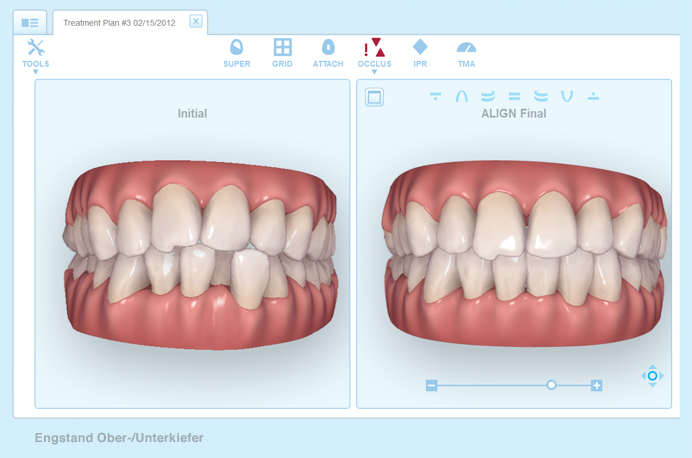

Next, an algorithm is used to calculate the perfect position of your teeth and the difference between the current and perfect state. This is done (according to Invisalign) using their ClinCheck® software. What is behind this software? Invisalign’s website tells us, “The algorithm helps calculate just the right amount of force for every tooth movement”, “The software, with input from your doctor, helps ensure every tooth moves in the right order and at the right time” and the whole thing is “Powered by data from 9 million smiles.” Let’s dig a bit deeper.
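ClinCheck’s internals are proprietary, but the “difference between the current and perfect state” can be illustrated with a simplified sketch. It assumes each tooth is described by a 2D pose seen from above (position in mm plus a rotation angle around its long axis); the `ToothPose` type, the function name, and the example poses are hypothetical. Real planning works in 3D and also tracks tipping and torque.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ToothPose:
    """Hypothetical simplified pose of one tooth, seen from above (2D)."""
    x_mm: float
    y_mm: float
    angle_deg: float  # rotation around the tooth's long axis


def movement_needed(current, target):
    """Per-tooth (translation in mm, rotation in degrees) from current to target pose."""
    plan = {}
    for tooth, now in current.items():
        goal = target[tooth]
        distance = math.hypot(goal.x_mm - now.x_mm, goal.y_mm - now.y_mm)
        # Wrap the rotation into [-180, 180) so we always take the shorter way round.
        rotation = (goal.angle_deg - now.angle_deg + 180.0) % 360.0 - 180.0
        plan[tooth] = (round(distance, 3), round(rotation, 3))
    return plan


if __name__ == "__main__":
    # FDI tooth numbers 11 and 21 are the upper central incisors; poses are invented.
    current = {"11": ToothPose(0.0, 0.0, 10.0), "21": ToothPose(8.5, 0.0, -5.0)}
    target = {"11": ToothPose(0.3, 0.4, 0.0), "21": ToothPose(8.5, 0.0, 0.0)}
    print(movement_needed(current, target))
    # {'11': (0.5, -10.0), '21': (0.0, 5.0)}
```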

“Beauty is in the eye of the beholder” – but who exactly is the beholder?

The first important question to ask is: how does whoever programmed the software (or even the dentist) know what the teeth should ideally look like? What is the “right” amount of movement? What makes teeth aesthetically pleasing? Zimmermann and Mehl (2015) write in their survey:

“Redesigning the appearance of the anterior [front] teeth in the smile design process is a demanding task, first, because this changes the patient’s smile characteristics and, second, because the esthetic wishes of the patient must be accommodated within the predetermined functional, structural and biological framework.”

Sounds quite complicated. So how does Invisalign accomplish this?

The 2003 paper I mentioned, which gives details on the Invisalign process, does not give a very satisfying answer to this question, except to say that the teeth are placed in “a clinically and esthetically acceptable final position” (see Beers et al., 2003). Creating the perfect smile does not seem to be an exact science: according to another paper (see Davis, 2007), “Many scientific and artistic principles considered collectively are useful in creating a beautiful smile.” Let’s have a look at some examples.

Aesthetic Principles

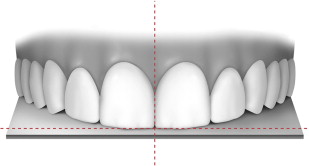

As mentioned earlier, my treatment started with a picture of my smile. To be honest, I originally thought there was some trivial reason for this: perhaps they liked my face and kept some sort of internal hall of fame for the best smiles achieved. That is likely not the reason, because while researching I came across something called Smile Design. These days, there are many guidelines for achieving a “perfect” smile, and profile pictures provide valuable input for reaching a person’s smile goals.

Several systems have been developed for this purpose, one of which is called Digital Smile Design (DSD). A look at their website confirmed that they have teamed up with Invisalign (https://digitalsmiledesign.com/planning-center/dsd-and-invisalign-tps).

Four main parts are likely analysed from the pictures: the lip line, the “smile curve,” the shape of your teeth, and certain facial features, such as height and width.
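As one example of how “the shape of your teeth” can be turned into a number, smile-design guidelines often look at the width-to-height ratio of the upper central incisors. The sketch below classifies that ratio against a target range of 75-80%, a guideline frequently quoted in the aesthetic-dentistry literature; treat the range, the function name, and the measurements as assumptions, not as what DSD or Invisalign actually computes.

```python
def incisor_width_to_height(width_mm, height_mm, low=0.75, high=0.80):
    """Classify a central incisor's width-to-height ratio against an assumed target range."""
    if height_mm <= 0:
        raise ValueError("height must be positive")
    ratio = width_mm / height_mm
    if ratio < low:
        label = "narrower than target"
    elif ratio > high:
        label = "wider than target"
    else:
        label = "within target"
    return round(ratio, 3), label


if __name__ == "__main__":
    # Invented measurements in mm (width, height).
    for width, height in [(8.0, 10.5), (7.0, 10.5), (9.0, 10.5)]:
        print(width, height, incisor_width_to_height(width, height))
```

A full smile analysis would combine several such measurements (lip line, smile curve, facial proportions) and leave the final call to the clinician.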

Scientific Principles

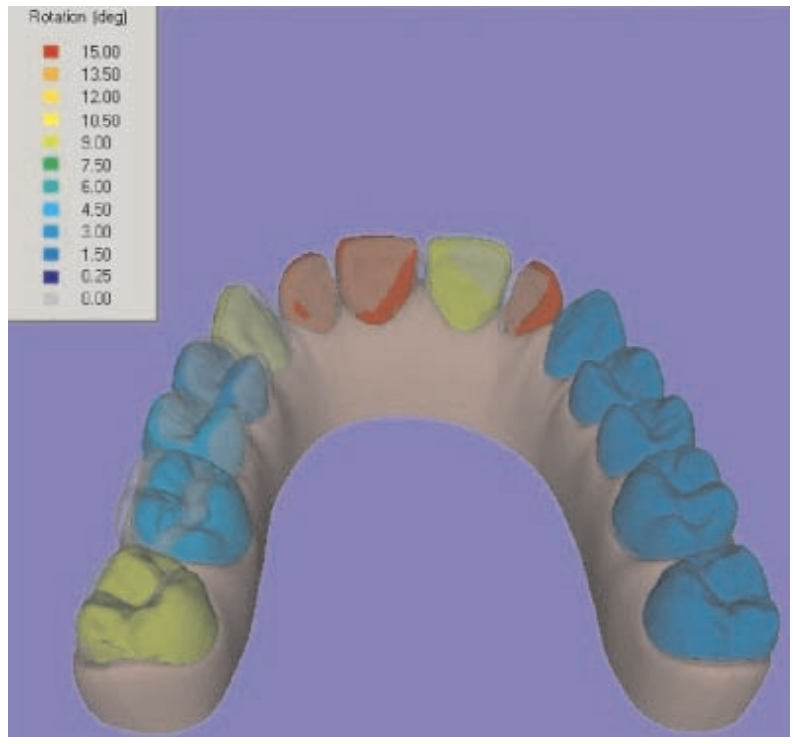

Of course, your smile is not the only thing that matters. By creating a 3D model of your teeth, roots, and gums, the system can also determine what is feasible and what is not. For instance, teeth can only move in two ways: by translation or rotation (or a combination of both). Furthermore, force needs to be applied with the center of resistance of each tooth in mind. This is similar to the center of gravity in physics: every object has one point at which it can be balanced, and how it moves depends on where the line of action of the force passes relative to this point. A force whose line passes through that point moves the tooth without turning it; a force that misses it also makes the tooth rotate or tip.
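The center-of-resistance idea can be made concrete with a 2D rigid-body sketch: the turning effect (moment) of a force about the center of resistance is the cross product r × F, where r points from the center of resistance to where the force is applied. Zero moment means pure translation; anything else adds rotation. The positions and forces below are invented, the tooth is treated as a free rigid body, and the location of the center of resistance is simply assumed (in reality it depends on the root and the surrounding bone; Smith & Burstone, 1984, in the references, cover these mechanics in depth).

```python
def moment_about_cr(cr, point, force):
    """Moment (N·mm) about the center of resistance of a force applied at `point`.

    cr, point: (x, y) positions in mm; force: (fx, fy) in newtons.
    In 2D the moment is the cross product r × F, with r = point - cr.
    """
    rx, ry = point[0] - cr[0], point[1] - cr[1]
    fx, fy = force
    return rx * fy - ry * fx


def describe_movement(cr, point, force, tol=1e-9):
    """Rigid-body view: no moment means pure translation, otherwise rotation too."""
    m = moment_about_cr(cr, point, force)
    if abs(m) <= tol:
        return "translation"
    direction = "counter-clockwise" if m > 0 else "clockwise"
    return f"translation + {direction} rotation (moment {m:.1f} N·mm)"


if __name__ == "__main__":
    cr = (0.0, 0.0)     # assumed center of resistance, in the root
    crown = (0.0, 8.0)  # assumed point on the crown, 8 mm above it
    print(describe_movement(cr, crown, (1.0, 0.0)))   # sideways push on the crown
    print(describe_movement(cr, crown, (0.0, -1.0)))  # push along the long axis
```

The sideways push produces a moment of -8 N·mm and therefore tips the tooth, while the push along the long axis passes through the center of resistance and produces no rotation.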

To summarise: Invisalign uses aesthetic and scientific principles, just like an orthodontist would, and likely examples of previous outcomes (given they have data from 9 million smiles) to create a realistic “goal” model of your teeth.

Movement Planning

Once we have a model of the existing teeth and a model of the ideal tooth positions, it is time to calculate how to get from A to B. This process is called Movement Planning, and it takes into account how force should be applied to make each tooth move in the desired way. Importantly, Invisalign aligners can only push, not pull, which also needs to be taken into account.

Likely, the software calculates several paths of how to get there, and a clinician or the dentist chooses the best path.

A set of intermediate steps is calculated, which the teeth have to reach with the weekly changing aligners. Invisalign’s software outputs the treatment plan with all the intermediate steps, which the dentist can view and modify if he or she thinks the teeth will not move as planned. In the end, virtual planning is not the same as hands-on planning by a dentist. Nevertheless, many companies are trying to minimise contact with the dentist to cut costs, leaving more and more up to the software.
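The idea of intermediate steps can be sketched as simple linear staging: cap how far any tooth may move per aligner, derive the number of aligners from the tooth that has to travel furthest, and split every tooth’s movement evenly across them. The 0.25 mm cap is a per-aligner figure often quoted for translations and is an assumption here, as are the function name and input format. Real plans also sequence movements (some teeth first, others later) and handle rotations, which this sketch ignores.

```python
import math


def plan_stages(displacements_mm, max_step_mm=0.25):
    """Split each tooth's total planned movement into equal per-aligner steps.

    displacements_mm: tooth label -> total movement in mm (hypothetical input).
    max_step_mm: assumed cap on how far any tooth moves per aligner.
    Returns one dict per aligner with the cumulative movement planned so far.
    """
    largest = max(abs(d) for d in displacements_mm.values())
    n_stages = max(1, math.ceil(largest / max_step_mm))
    return [
        {tooth: round(d * k / n_stages, 3) for tooth, d in displacements_mm.items()}
        for k in range(1, n_stages + 1)
    ]


if __name__ == "__main__":
    stages = plan_stages({"11": 1.0, "21": -0.5})
    for week, stage in enumerate(stages, start=1):
        print(f"aligner {week}: {stage}")
```

Here the 1 mm movement of tooth 11 sets the pace (four aligners of 0.25 mm each), and tooth 21 moves proportionally less per step.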

Using another technology called SmartStage, the movements are distributed across the set of aligners, which are then produced with the help of 3D printing.

Outcome Analysis

The last part of the process is to make another scan of your teeth and compare the result with the desired result (I have not yet reached this point).
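The clinical studies discussed below report how well planned movements were achieved as a percentage accuracy. A common way to compute such a figure is 100% minus the error relative to the predicted movement; the exact definition used here is an assumption, so check each paper for its own. The numbers in the example are invented.

```python
def movement_accuracy(predicted, achieved):
    """Percentage accuracy: 100% minus the error relative to the predicted movement."""
    if predicted == 0:
        raise ValueError("predicted movement must be non-zero")
    return 100.0 - abs(predicted - achieved) * 100.0 / abs(predicted)


def mean_accuracy(pairs):
    """Average accuracy over (predicted, achieved) pairs, e.g. one pair per tooth."""
    scores = [movement_accuracy(p, a) for p, a in pairs]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Invented numbers: a planned 10° rotation that only reached 4°,
    # and a planned 2 mm movement that was fully achieved.
    print(movement_accuracy(10.0, 4.0))              # 40.0
    print(mean_accuracy([(10.0, 4.0), (2.0, 2.0)]))  # 70.0
```

Note that overshooting is penalised as well: a planned 2 mm movement that ends up at 3 mm also scores only 50%.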

If you actually wore your aligners the recommended 20-22 hours per day, your teeth should now be perfect, your smile bright and wonderful, so you can live happily ever after. Right?

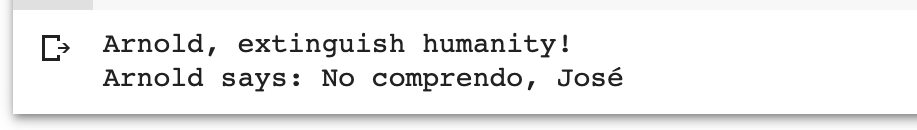

The Bad News

Invisalign was introduced in 1999 by the U.S.-based Align Technology, a manufacturer of 3D digital scanners (although they were by no means the first to have the idea of an “invisible” aligner: Ponitz had already introduced one in 1971). However, the first scientific clinical study to evaluate its effectiveness was not done until 10 years later, in 2009, after a 2005 systematic review (Lagravère & Flores-Mir, 2005) found no adequately designed studies and concluded that no scientifically valid claims could be made about the treatment effect of the aligners.

Subsequent studies, such as Kravitz et al. (2009), showed significant differences between the proposed virtual results and the actual clinical tooth movements, in some cases with less than 50% accuracy. One paper (Hennessy & Al-Awadhi, 2016) concludes:

“Clear aligner clinical usage has not been matched by high-quality research. Until these data become available, these appliances will continue to be viewed with a degree of scepticism by many orthodontists.”

A systematic review from 2018 found that only 3 of the 22 surveyed studies showed a low risk of bias, and concluded by saying:

“Despite the fact that orthodontic treatment with Invisalign® is a widely used treatment option, apart from non-extraction treatment of mild to moderate malocclusions of non-growing patients, no clear recommendations about other indications of the system can be made, based on solid scientific evidence.” (Papadimitriou et al., 2018)

The latest research I found, a 2020 paper whose title starts with “Has Invisalign improved?” (Haouili et al., 2020), concludes that it did improve a bit (i.e. accuracy is now around 50%, yay…), but “Despite the improvement, the weaknesses of tooth movement with Invisalign remained the same.”

You can imagine me at this point in my research, aligners in my mouth, nervous twitch on my face, wondering why I did not think of checking all of this before starting the treatment…

The Good News

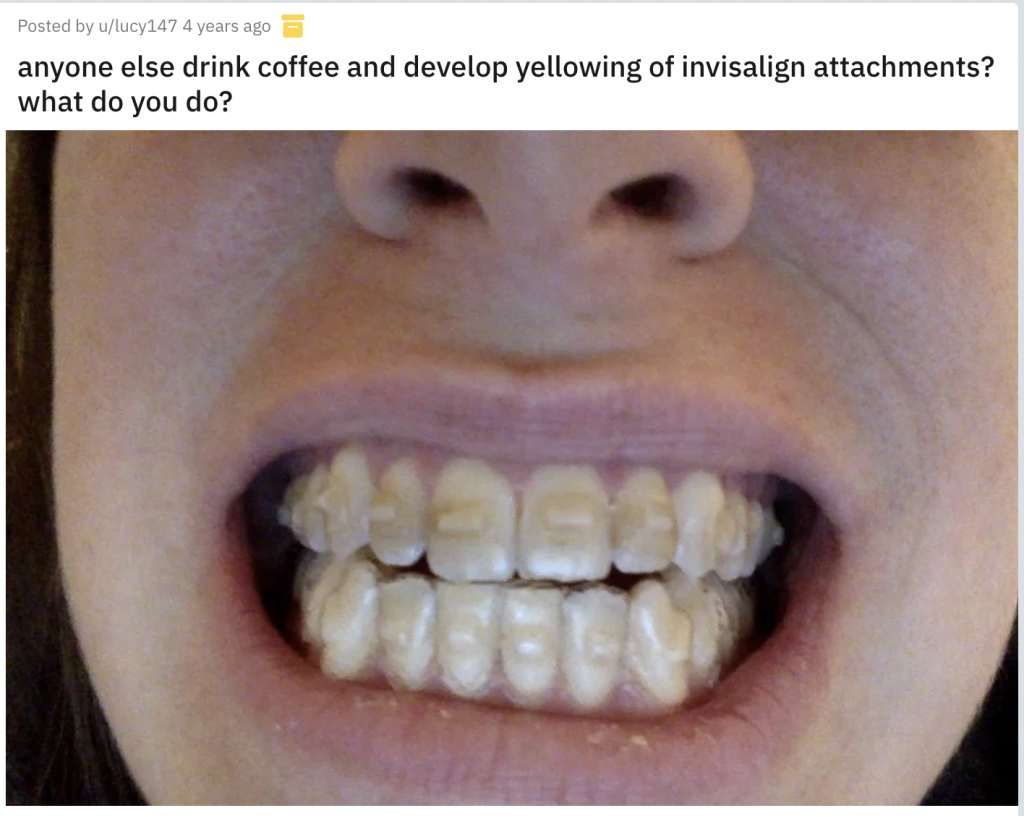

Apparently, there have been several generations of aligners, and the third one got things a bit more right. It all comes down to how force is applied to your teeth and how that force relates to the center of resistance of each tooth, which determines whether a tooth merely tips or moves the way it should. Removable aligners cannot easily apply force in the right way relative to this center of resistance, so attachments came to the rescue. Attachments are small tooth-coloured bumps glued to your teeth (basically like clear versions of regular brackets) that give the aligner something to push against. Nowadays, the software adds them automatically in certain cases, such as derotations and root movements.
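Why attachments help can be illustrated with the moment-to-force (M/F) ratio used in orthodontic mechanics (see Smith & Burstone, 1984, in the references). A push on the crown alone tends to tip the tooth; if the appliance can also apply a counter-moment, the ratio of that moment to the force decides the movement. When M/F equals the distance from the force to the center of resistance, the tipping is cancelled and the tooth translates. The sketch classifies movements this way; the function name, the tolerance, and the 10 mm distance in the example are assumptions.

```python
def classify_movement(moment_nmm, force_n, distance_to_cr_mm, tol=0.05):
    """Classify tooth movement by the moment-to-force (M/F) ratio.

    moment_nmm: counter-moment the appliance applies (N·mm), opposing tipping.
    force_n: force applied at the crown (N).
    distance_to_cr_mm: assumed distance from the force to the center of resistance.
    tol: relative tolerance (an arbitrary choice) for "equal to" comparisons.
    """
    if force_n <= 0 or moment_nmm < 0:
        raise ValueError("expects a positive force and a non-negative counter-moment")
    ratio = moment_nmm / force_n  # in mm
    if ratio <= tol * distance_to_cr_mm:
        return "uncontrolled tipping"
    if abs(ratio - distance_to_cr_mm) <= tol * distance_to_cr_mm:
        return "translation (bodily movement)"
    if ratio < distance_to_cr_mm:
        return "controlled tipping"
    return "root movement"


if __name__ == "__main__":
    # Invented example: 1 N on the crown, center of resistance assumed 10 mm away.
    for moment in [0.0, 7.0, 10.0, 12.0]:
        print(moment, classify_movement(moment, 1.0, 10.0))
```

A smooth plastic tray pressing on a smooth tooth struggles to produce that counter-moment; an attachment gives it a surface to push against, which is why derotations and root movements are where they tend to appear.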

The funny thing, of course, is that if you need attachments on every tooth, there is no longer a big difference between regular brackets and Invisalign in terms of aesthetics, since nowadays regular fixed ceramic brackets can be quite “invisible” as well (no more metal toolbox in your mouth).

But for some more good news: most papers seem to agree that “light” cases can be successfully treated with Invisalign, and the dentist should tell you from the beginning whether your case is treatable with this system or requires old-school brackets. Since I have the Invisalign Lite version (only minor changes to the front teeth), I should be a very light case (at this point, I am mostly talking to myself to feel more confident).

I recently had to go back to the dentist to get my attachments added. While the first few weeks were easy-peasy, the attachments take some getting used to. I will update this article once my treatment is complete and add my own experience.

*** UPDATE ***

I am done with my treatment and the outcome is impressive! So, forget everything I said before. Just kidding, here are a few take-aways:

- Teeth tech is cool.

- It’s all about attachments: without them, things will not move the way they should, and of course Invisalign’s ads will not show you that attachments are not as comfy-invisible as plain trays.

- After your treatment, you might need an after-treatment of a few weeks (I needed 5 extra weeks) to fix some last small issues, which prolongs the whole endeavour.

- After the after-treatment, to prevent your teeth from moving back to where they were, you need to either a) wear a last pair of trays overnight for life, or b) get some wires put in for life. I chose the latter and can tell you that they take some time to get used to (it probably took me 4 weeks to stop being aware of them in my mouth).

- Is it worth it? Absolutely. I am extremely happy with the outcome and have received compliments on my beautiful straight teeth. 🙂

References

Beers, A., Choi, W., & Pavlovskaia, E. (2003). Computer-assisted treatment planning and analysis. Orthodontics & Craniofacial Research, 6(Suppl 1), 117-125.

Flügge, T. V., Schlager, S., Nelson, K., Nahles, S., & Metzger, M. (2013). Precision of intraoral digital dental impressions with iTero and extraoral digitization with the iTero and a model scanner. American Journal of Orthodontics and Dentofacial Orthopedics, 144(3), 471-478.

Haouili, N., Kravitz, N., Vaid, N. R., Ferguson, D., & Makki, L. (2020). Has Invisalign improved? A prospective follow-up study on the efficacy of tooth movement with Invisalign. American Journal of Orthodontics and Dentofacial Orthopedics.

Hennessy, J., & Al-Awadhi, E. (2016). Clear aligners generations and orthodontic tooth movement. Journal of Orthodontics, 43, 68-76.

Kokich, V., Kiyak, H., & Shapiro, P. (1999). Comparing the perception of dentists and lay people to altered dental esthetics. Journal of Esthetic Dentistry, 11(6), 311-324.

Kravitz, N., Kusnoto, B., Begole, E., Obrez, A., & Agran, B. (2009). How well does Invisalign work? A prospective clinical study evaluating the efficacy of tooth movement with Invisalign. American Journal of Orthodontics and Dentofacial Orthopedics, 135(1), 27-35.

Lagravère, M. O., & Flores-Mir, C. (2005). The treatment effects of Invisalign orthodontic aligners: a systematic review. Journal of the American Dental Association, 136(12), 1724-1729. doi:10.14219/jada.archive.2005.0117

Papadimitriou, A., Mousoulea, S., Gkantidis, N., & Kloukos, D. (2018). Clinical effectiveness of Invisalign® orthodontic treatment: a systematic review. Progress in Orthodontics, 19.

Ponitz, R. J. (1971). Invisible retainers. American Journal of Orthodontics, 59, 266-272.

Smith, R., & Burstone, C. (1984). Mechanics of tooth movement. American Journal of Orthodontics, 85(4), 294-307.

Zheng, L., Li, G., & Sha, J. (2007). The survey of medical image 3D reconstruction. Fifth International Conference on Photonics and Imaging in Biology and Medicine, Proceedings of SPIE, 6534, 65342K. doi:10.1117/12.741321

Zimmermann, M., & Mehl, A. (2015). Virtual smile design systems: a current review. International Journal of Computerized Dentistry, 18(4), 303-317.