Artificial Intelligence (AI) is one of those words that is thrown around by everybody and their grandmother these days. If I were to ask you what AI is, what would your answer be? Perhaps you would recall some movies you may have watched, such as Terminator, Her, or Ex Machina, and say AI is one of those evil things that will take over the world and kill people sooner or later.

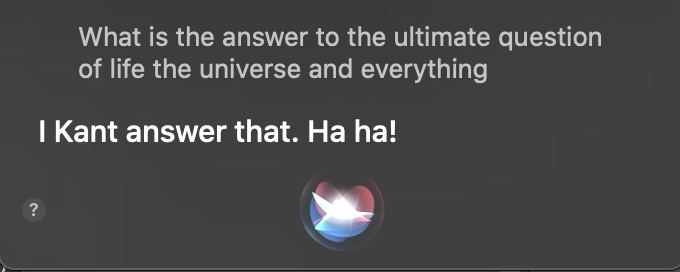

Or, perhaps, you would take a less apocalyptic, sci-fi approach and say, well, it is human intelligence demonstrated by machines. Next, I would ask you: and what is intelligence? Then you would perhaps answer, well, intelligence is a wishy-washy concept (in fact, intelligence has long been studied by psychologists, sociologists, biologists, neuroscientists, and philosophers, leading to over 70 definitions; see Legg, S., & Hutter, M. (2007). A collection of definitions of intelligence. In B. Goertzel & P. Wang (Eds.), Proceedings of the 2007 conference on advances in artificial general intelligence: Concepts, architectures and algorithms: Proceedings of the AGI workshop 2006 (pp. 17–17). Amsterdam, The Netherlands: IOS Press) that can mean anything from being able to reason, learn, and acquire knowledge to being self-aware or emotionally intelligent (kudos to you for such a sensitive answer!). Then I would ask you: and where have you encountered such intelligence displayed by machines? At which point you might say, “Siri is a bit like this? Or maybe Google?”

Ah, now we get to interesting types of technologies. Siri recognises what you say and formulates an answer, which it does by using Natural Language Processing (NLP), a field that today relies heavily on Machine Learning (ML). ML, in turn, is a specific type of AI. And we are back to the question: what is AI? (Fear not, we will get back to NLP and ML shortly.)

One can take a broad view of AI, where pretty much anything based on statistics is a form of AI. Some companies have used such a broad view to their advantage by marketing their products as “AI-based” even if they are actually built on simpler regression models or rules. This even has its own term: “AI washing” (see https://www.thinkautomation.com/bots-and-ai/watch-out-for-ai-washing/).

At the other end of the spectrum, you have those who believe AI should only refer to Artificial General Intelligence, something that comes closer to our Sci-Fi Arnold. Let me give you an example of each.

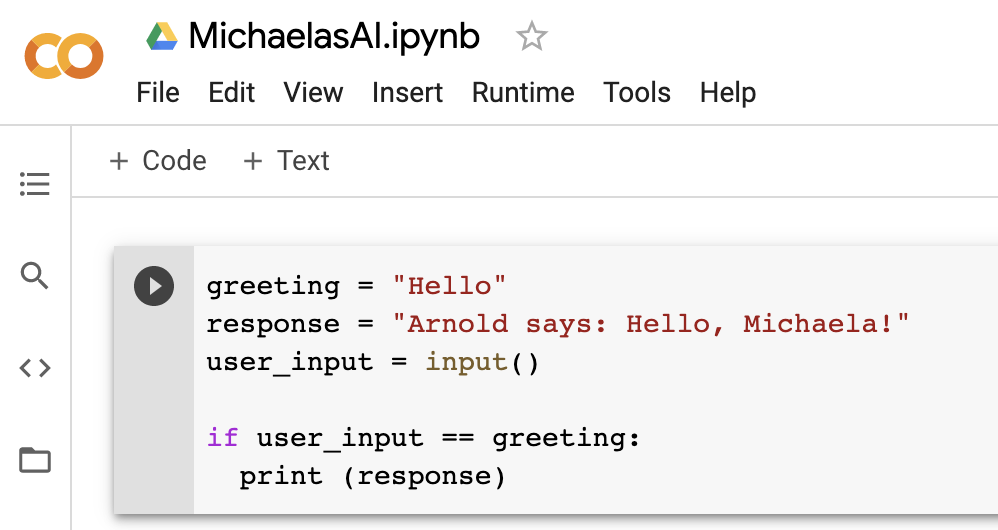

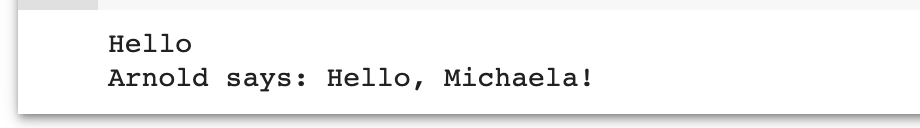

Take Siri, for instance. Siri currently sits somewhere between the two ends of that spectrum. What would a simple, rule-based little brother of Siri (let’s call him Arnold) look like? You would take your favourite programming language (mine is Python) and start typing a few lines, like so: if Michaela says “Hello,” then Arnold should respond “Hello, Michaela!” In Python, it would look something like this:

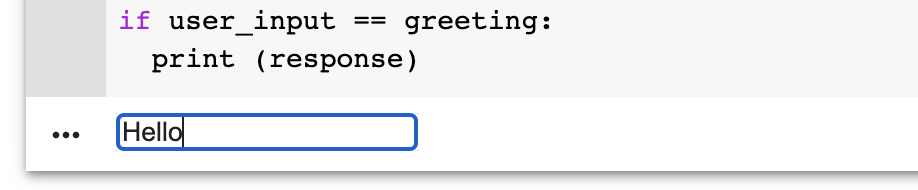

I can now run this script, type in “hello” and get an answer:

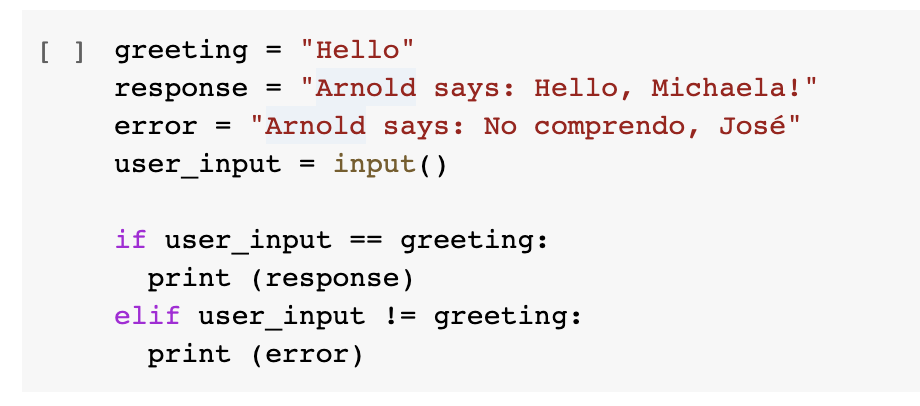

Wow, amazing! Although it would also be great if Arnold knew what to do when I don’t type exactly the word “hello.”

Will Arnold kill us all soon?

Doesn’t look like it.

This is what you would call a rule-based system (a veeery simple one; you can try coding this yourself at colab.research.google.com, by the way 🙂). Everything that happens needs to be explicitly coded; otherwise, it will generate an error (or go into an endless “No comprendo, José” loop).
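To see what “everything needs to be explicitly coded” means in practice, here is a minimal sketch of a slightly chattier Arnold. It is not the script from the screenshot above; `RULES`, `FALLBACK`, and `respond` are hypothetical names chosen for illustration. Every phrasing Arnold can handle has to be written into the rule table by hand, and anything else lands in the “No comprendo, José” fallback.

```python
# A toy rule-based chatbot: every input it "understands" is listed by hand.
# RULES, FALLBACK and respond() are hypothetical names for this illustration.

RULES = {
    "hello": "Hello, Michaela!",
    "hi": "Hello, Michaela!",
    "how are you": "I'm a few lines of Python, so... fine, I guess.",
    "bye": "Goodbye, Michaela!",
}

FALLBACK = "No comprendo, José."


def respond(message: str) -> str:
    """Look the normalised message up in the rule table; no learning involved."""
    # Normalise a little so "Hello!" and " hello " hit the same rule.
    key = message.strip().lower().rstrip("!?.")
    return RULES.get(key, FALLBACK)


for message in ["Hello!", "  hi ", "Hey there"]:
    print(f"You: {message!r} -> Arnold: {respond(message)}")
```

“Hey there” still gets the fallback, simply because nobody wrote a rule for it: the bot is exactly as clever as its rule table.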

Of course, I made it sound highly simplistic, but many companies actually use chatbots based on rule-type logic and call them “AI-based.” In terms of intelligence, though, they might be closer to a Roomba, one of those cleaning robots you might have in your home. It will talk at times, but only because it has been instructed to talk at those times, like when returning “home.” It simply checks whether a rule applies and follows instructions. There is no machine learning there, and experts in the field would likely not call this AI (although the latest Roombas do use machine learning to clean; post on that to come!).

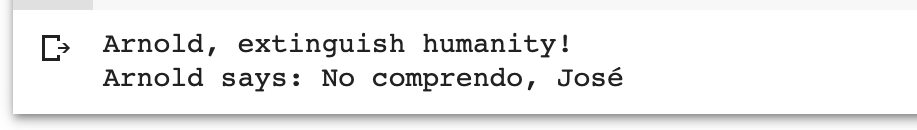

Siri is a bit different.

Even though she tells you that she only understands what she has been programmed to understand, it is not like our example above. Because Siri can kill you.

Just kidding!

Siri first uses speech recognition technology to translate your speech into text. This means that Siri records the words you say into your microphone, sends them to an Apple server, accesses a database, breaks your words down into teeny-tiny units of sound called phonemes, uses statistics to estimate the probability that your sequence of phonemes matches one recorded in the database, and finally decides whether there is a “match” or not. Homophones are especially tricky (e.g., did you say merry, marry, or Mary?), so Siri may also analyse the sentence as a whole, break it down into its linguistic parts of speech (such as nouns, verbs, and adjectives), and figure out which of the three words is most likely. Now do you see why Siri is not happy when you speak to her in Swiss German or some other dialect? How should poor Siri know that Chuchichäschtli means Küchenschrank (German for kitchen cupboard)?
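To get a feel for the “which of the three words is most likely” step, here is a deliberately tiny sketch. It is not how Siri works internally, and it swaps in a different technique from the part-of-speech analysis described above: a bigram language model that scores each candidate by how often it appears next to its neighbouring words in a toy corpus. The phoneme lexicon, the corpus, and `pick_word` are all hypothetical and simplified.

```python
from collections import Counter

# Simplified, ARPAbet-style phoneme string. In many American accents,
# "merry", "marry" and "Mary" are all pronounced this way.
SOUNDS_LIKE_MERRY = ("M", "EH", "R", "IY")

# Hypothetical pronunciation lexicon: phoneme sequence -> candidate words.
LEXICON = {
    SOUNDS_LIKE_MERRY: ["merry", "marry", "mary"],
}

# Toy, lowercased corpus standing in for the huge text collections real systems use.
SENTENCES = [
    "we wish you a merry christmas",
    "have a merry christmas",
    "will you marry me",
    "i want to marry you",
    "i talked to mary yesterday",
    "mary said hello",
]

# Count adjacent word pairs (bigrams); "<s>" marks the start of a sentence.
bigram_counts = Counter()
for sentence in SENTENCES:
    words = ["<s>"] + sentence.split()
    bigram_counts.update(zip(words, words[1:]))


def pick_word(previous_word, phonemes, next_word):
    """Choose the candidate that best fits its neighbours in the toy corpus."""
    candidates = LEXICON[tuple(phonemes)]

    def score(word):
        # How often did this word follow the previous word, and precede the next one?
        return bigram_counts[(previous_word, word)] + bigram_counts[(word, next_word)]

    # Ties go to the first candidate in the lexicon list.
    return max(candidates, key=score)


for before, after in [("a", "christmas"), ("you", "me"), ("to", "yesterday")]:
    print(f"{before} ___ {after} -> {pick_word(before, SOUNDS_LIKE_MERRY, after)}")
```

With this toy corpus, “a ___ christmas” comes out as merry and “you ___ me” as marry. For “to ___ yesterday”, the word before the gap is a tie between marry and mary, and only the word after it settles the matter, which is why looking at the sentence as a whole helps.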

Actually, some nice researchers are working on speech recognition systems that recognise your slang-y kinda language (e.g., Swisscom and the Language and Space Lab at UZH).

Where were we? Right, so now Siri has recognised what you said (or not).

Next, she tries to figure out the meaning behind what you said, so that she knows how to proceed. For this, she uses Natural Language Understanding (NLU) techniques (NLU is a subfield of NLP), such as intent classification. Let’s imagine you say, “Siri, wake me up at 6am.” After converting your speech into text, she uses a machine learning model to predict the intent of this sentence. That model has been trained on a large dataset of sentences labelled with their intents (e.g., for the sentence “Wake me up,” the intent label might be “set alarm”; for the sentence “Remind me,” the intent label might be “set reminder”).
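Here is a minimal sketch of intent classification, assuming a tiny hand-written training set and a multinomial Naive Bayes classifier written from scratch. Naive Bayes is a classic, simple choice swapped in for illustration; Apple’s actual model and training data are certainly much larger and different, so treat `TRAINING_DATA`, `train`, and `predict_intent` as hypothetical stand-ins. The point is that the model learns which words go with which intent from labelled examples, instead of someone writing a rule for every phrasing.

```python
import math
import re
from collections import Counter, defaultdict

# Hypothetical labelled examples: (sentence, intent label).
TRAINING_DATA = [
    ("wake me up at 7am", "set alarm"),
    ("set an alarm for 6:30", "set alarm"),
    ("wake me up tomorrow morning", "set alarm"),
    ("alarm at 5am please", "set alarm"),
    ("remind me to buy milk", "set reminder"),
    ("remind me to call mum tonight", "set reminder"),
    ("don't let me forget the meeting", "set reminder"),
    ("can a duck's quack echo", "knowledge question"),
    ("what is the capital of switzerland", "knowledge question"),
    ("who wrote ex machina", "knowledge question"),
]


def tokenize(text):
    """Lowercase and split into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def train(examples):
    """Count words per intent for a multinomial Naive Bayes model."""
    word_counts = defaultdict(Counter)  # intent -> word -> count
    intent_counts = Counter()           # intent -> number of examples
    vocabulary = set()
    for sentence, intent in examples:
        tokens = tokenize(sentence)
        word_counts[intent].update(tokens)
        intent_counts[intent] += 1
        vocabulary.update(tokens)
    return word_counts, intent_counts, vocabulary


def predict_intent(sentence, model):
    """Return the intent with the highest log-probability for the sentence."""
    word_counts, intent_counts, vocabulary = model
    total_examples = sum(intent_counts.values())
    best_intent, best_score = None, -math.inf
    for intent in intent_counts:
        # log P(intent): how common this intent is in the training data.
        score = math.log(intent_counts[intent] / total_examples)
        total_words = sum(word_counts[intent].values())
        for token in tokenize(sentence):
            # log P(word | intent) with add-one (Laplace) smoothing,
            # so a word never seen with this intent doesn't zero out the score.
            count = word_counts[intent][token] + 1
            score += math.log(count / (total_words + len(vocabulary)))
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent


model = train(TRAINING_DATA)
print(predict_intent("Siri, wake me up at 6am", model))
```

Even though “Siri, wake me up at 6am” never appears in the training data, the words “wake”, “me”, “up”, and “at” tip the balance towards “set alarm”.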

Once she knows the intent is “set alarm” or “set reminder,” she uses something called slot filling. Setting an alarm requires her to know at what time, so she will look for something in the sentence that matches a time, such as “6am.” She has been instructed to open the clock app on your device and set the alarm to the time you specified. If no time is specified, she will ask you for the missing information. Similarly, for a reminder, she needs to know what you want to be reminded of.
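Slot filling for the alarm case can be sketched with a regular expression that looks for something shaped like a time. This is a simplification: in practice, slot filling is often done with trained models rather than hand-written patterns, and `TIME_PATTERN`, `fill_time_slot`, and `handle_set_alarm` are hypothetical names. If the slot stays empty, the sketch asks a follow-up question, just as Siri asks for the missing information.

```python
import re
from typing import Optional

# Matches things like "6am", "6 am", "6:30pm" or "18:45" (a simplified, hypothetical pattern).
TIME_PATTERN = re.compile(r"\b(\d{1,2})(?::(\d{2}))?\s*(am|pm)?\b", re.IGNORECASE)


def fill_time_slot(sentence: str) -> Optional[str]:
    """Return the first time found in the sentence as "HH:MM", or None."""
    for match in TIME_PATTERN.finditer(sentence):
        hour_text, minute_text, ampm = match.groups()
        # A bare number like "2" in "buy 2 eggs" is not treated as a time.
        if minute_text is None and ampm is None:
            continue
        hour = int(hour_text)
        minute = int(minute_text) if minute_text else 0
        if ampm:
            if not 1 <= hour <= 12:
                continue
            ampm = ampm.lower()
            if ampm == "am" and hour == 12:
                hour = 0  # 12am is midnight
            elif ampm == "pm" and hour != 12:
                hour += 12
        if hour > 23 or minute > 59:
            continue
        return f"{hour:02d}:{minute:02d}"
    return None


def handle_set_alarm(sentence: str) -> str:
    """Fill the 'time' slot, or ask a follow-up question if it is missing."""
    time_slot = fill_time_slot(sentence)
    if time_slot is None:
        return "What time should I set the alarm for?"
    # A real assistant would now hand the time to the clock app; here we just report it.
    return f"OK, alarm set for {time_slot}."


print(handle_set_alarm("Siri, wake me up at 6am"))
print(handle_set_alarm("Siri, wake me up"))
```

The first call fills the slot with 06:00; the second finds no time and asks a follow-up question instead of guessing.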

If you ask her if a duck’s quack can echo, she will recognise that this is a knowledge-type question and that she should query the internet (instead of filling slots). Like so:

You see, a bit more complicated than our “if … then …” statement earlier. And also much more versatile.
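The routing decision described above (query the web for knowledge questions, fill slots for alarms and reminders) can be sketched as a lookup from predicted intent label to handler. This is a hypothetical structure, not Apple’s code: the handler functions are placeholders that only describe what a real assistant would do next, and `HANDLERS` and `route` are illustrative names.

```python
from typing import Callable, Dict


def answer_knowledge_question(sentence: str) -> str:
    # Placeholder: a real assistant would query a search engine or knowledge base here.
    return f"Searching the web for: {sentence}"


def start_alarm_flow(sentence: str) -> str:
    # Placeholder: this is where the 'time' slot would be filled from the sentence.
    return "Filling the 'time' slot to set an alarm."


def start_reminder_flow(sentence: str) -> str:
    # Placeholder: this is where the 'what to remind you of' slot would be filled.
    return "Filling the 'task' slot to set a reminder."


# Each intent label (as predicted by an intent classifier) maps to a handler.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "knowledge question": answer_knowledge_question,
    "set alarm": start_alarm_flow,
    "set reminder": start_reminder_flow,
}


def route(intent: str, sentence: str) -> str:
    """Dispatch the sentence to the handler registered for its predicted intent."""
    handler = HANDLERS.get(intent)
    if handler is None:
        return "Sorry, I can't help with that yet."
    return handler(sentence)


print(route("knowledge question", "Can a duck's quack echo?"))
print(route("set alarm", "Wake me up at 6am"))
```

Notice that the handlers themselves are still explicitly programmed; what the machine learning part contributes is the mapping from free-form sentences to intent labels.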

Now we have something that we can safely call AI. Siri “listens” to your speech through your microphone, processes it, learns something from it, and performs an action, all autonomously (this is in line with Russell and Norvig’s definition of artificial agents; see Russell, S., & Norvig, P. (2010). Artificial intelligence: A modern approach (3rd international ed.)).

But this is not Artificial General Intelligence (AGI). AGI would imply that Siri could learn how to learn. Right now, she still relies on a programmer in the background to give her “if … then …” instructions on which database to access and which algorithm to use with which data, so that she can learn something very specific.

Instead, AGI would imply that Siri figures all of the above out by herself. In fact, natural language understanding belongs to a class of problems that has been termed “AI-hard” (by analogy to NP-hard problems in computer science), which means that if you could solve such a complex problem, you would have solved the core of the “AI problem” itself: Siri could memorise everything that has been said, take context into account, make decisions autonomously, potentially respond with emotions, and therefore do what a human can do.

Sounds creepy? I agree.

AGI is not yet on the horizon (although, whether you or I like it or not, research is working towards it; see, e.g., Pei, J., Deng, L., Song, S., et al. (2019). Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature, 572, 106–111. https://doi.org/10.1038/s41586-019-1424-8), and luckily so, because we are still far from figuring out which ethics apply when machines are involved and what makes us trust AI (I hope to contribute to this line of work in my PhD). This is why you will sometimes see funny experiments like the Moral Machine (https://www.moralmachine.net/), where researchers want to see which “lesser evil” people will choose, such as killing two passengers or five pedestrians. If we don’t even know what’s right, how should a machine know?

I can see you rolling your eyes at me now, because you made it all the way to this part of the post, realise that it is ending soon, and probably still don’t know what AI is. Let me reassure you: at times, neither does the research community (see Kaplan, A., & Haenlein, M. (2019). Siri, Siri, in my hand: Who’s the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence. Business Horizons, 62, 15–25). The answer is: it depends on what you consider intelligent.

More importantly: will Siri kill us? Here the answer is clearly no.

For now. 🙂